Daniel Hoelbling-Inzko talks about programming

kaernten.at – Caching and Lazy loading

In my last post on the technology behind www.kaernten.at, I concluded with the fairly bold statement that all objects are kept in memory to ease querying.

That’s not really true, but also not really false.

When the article list is loaded initially, only a slim index of all articles is retrieved from the database: all information relevant to querying and sorting (id, tags, publication date, visibility) is fetched at once. I used Castle DynamicProxy2 to create proxy objects that pose as the real thing and lazy load the article data on access. So although there may be 10,000 articles in memory at any time, only those that have actually been viewed by a user are fully loaded, while all query criteria are always present in memory.
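The proxy idea can be illustrated with a small sketch, written in Python rather than C# and using a hand-written class instead of a Castle DynamicProxy2 interceptor. It assumes a hypothetical `load_article` function standing in for the database fetch; `ArticleIndex` and `ArticleProxy` are illustrative names, not the classes used on kaernten.at. Filtering and sorting only touch the index fields, and the full article is fetched the first time its body is read.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional


@dataclass(frozen=True)
class ArticleIndex:
    """Slim fields that are always in memory and used for querying and sorting."""
    id: int
    tags: frozenset
    published: date
    visible: bool


class ArticleProxy:
    """Poses as a full article; loads the heavy data on first access only."""

    def __init__(self, index: ArticleIndex, loader: Callable[[int], dict]) -> None:
        self.index = index
        self._loader = loader                 # hypothetical database fetch
        self._data: Optional[dict] = None     # None until first access

    @property
    def body(self) -> str:
        if self._data is None:                # first access: fetch and keep it
            self._data = self._loader(self.index.id)
        return self._data["body"]


# Usage: ten proxies; odd ids are tagged "ski", even ids "lake".
fetched = []

def load_article(article_id: int) -> dict:
    fetched.append(article_id)
    return {"body": f"Full text of article {article_id}"}

articles = [
    ArticleProxy(ArticleIndex(i, frozenset({"ski" if i % 2 else "lake"}),
                              date(2009, 1, i + 1), True), load_article)
    for i in range(10)
]
newest_ski = sorted((a for a in articles if "ski" in a.index.tags and a.index.visible),
                    key=lambda a: a.index.published, reverse=True)
print(fetched)              # → []  (filtering and sorting loaded nothing)
print(newest_ski[0].body)   # → Full text of article 9
print(fetched)              # → [9]
```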

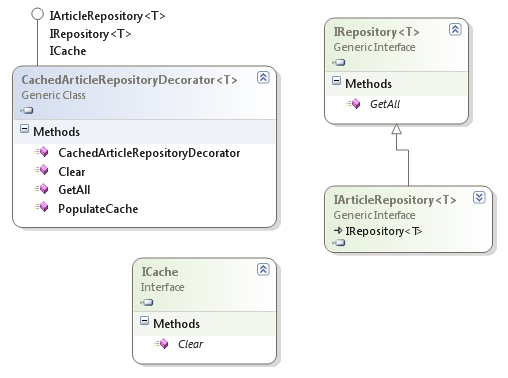

But lazy loading wouldn’t work if the list of proxy objects weren’t cached somewhere, and that’s what I want to go over in this post. All articles (in their proxied form) are retrieved through the generic IRepository<T> interface, which has only one method: GetAll(). I wanted to avoid tying the caching into the Repository at all costs, since that would make my life hell when testing the repository itself. So I decided the best course of action was to create a decorator that takes care of the caching, keeping it separate from the Repository implementation (which is also really easy when there is only one method to implement).
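A minimal sketch of such a caching decorator, again in Python rather than C#: `Repository` mirrors the single-method IRepository<T> interface, while `CachingRepository` and the `DbArticleRepository` used in the example are hypothetical names. The decorator exposes the same `get_all()` method as the repository it wraps, so callers cannot tell whether caching is switched on.

```python
from typing import Generic, List, Optional, Protocol, TypeVar

T = TypeVar("T")


class Repository(Protocol[T]):
    """Mirrors IRepository<T>: a single method returning every item."""

    def get_all(self) -> List[T]: ...


class CachingRepository(Generic[T]):
    """Decorator: same get_all() as the wrapped repository, plus caching."""

    def __init__(self, inner: Repository[T]) -> None:
        self._inner = inner
        self._items: Optional[List[T]] = None     # None means "not cached yet"

    def get_all(self) -> List[T]:
        if self._items is None:                   # first call, or after clear()
            self._items = self._inner.get_all()
        return self._items

    def clear(self) -> None:
        self._items = None


# Usage with a hypothetical repository that counts its database round trips.
class DbArticleRepository:
    def __init__(self) -> None:
        self.queries = 0

    def get_all(self) -> List[str]:
        self.queries += 1
        return ["article-1", "article-2"]


db = DbArticleRepository()
repo = CachingRepository(db)   # hand out `db` directly to switch caching off
repo.get_all()
repo.get_all()
print(db.queries)              # → 1
```

Because the decorator depends only on the interface, the repository itself can still be tested without any caching getting in the way.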

Switching caching on or off is now as simple as adding or removing one line in the Windsor configuration. And the caching code is shared among all Repository implementations, for any type of IRepository<T>.

If the cache needs to be cleared, I can simply ask the Windsor container for all classes implementing ICache (they are singletons) and call ICache.Clear() on each of them.
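The clearing step boils down to finding every registered singleton that implements the cache interface and clearing it. The toy `Container` below is a hypothetical Python stand-in that shows only this idea; it does not reproduce Windsor's API, and `Cache`, `ArticleCache` and `TagCache` are illustrative names.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Type, TypeVar

T = TypeVar("T")


class Cache(ABC):
    """Counterpart of ICache: anything that can be cleared."""

    @abstractmethod
    def clear(self) -> None: ...


class Container:
    """Hypothetical toy container holding one singleton instance per class."""

    def __init__(self) -> None:
        self._singletons: Dict[type, object] = {}

    def register(self, instance: object) -> None:
        self._singletons[type(instance)] = instance

    def resolve_all(self, service: Type[T]) -> List[T]:
        # Every registered singleton that implements the requested interface.
        return [s for s in self._singletons.values() if isinstance(s, service)]


def clear_all_caches(container: Container) -> int:
    caches = container.resolve_all(Cache)
    for cache in caches:
        cache.clear()
    return len(caches)


# Usage with two hypothetical caches and one unrelated service.
class ArticleCache(Cache):
    def __init__(self) -> None:
        self.items = ["article-1", "article-2"]

    def clear(self) -> None:
        self.items = []


class TagCache(Cache):
    def __init__(self) -> None:
        self.items = ["ski", "lake"]

    def clear(self) -> None:
        self.items = []


container = Container()
article_cache, tag_cache = ArticleCache(), TagCache()
for service in (article_cache, tag_cache, "unrelated service"):
    container.register(service)
print(clear_all_caches(container))  # → 2
```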

Now, the most interesting part is that the cache is asynchronous. When it gets cleared, a background worker is started that runs the PopulateCache() method to build the updated data, while subsequent requests to the Repository (made while the cache is being repopulated) still return the outdated list. Once the background worker has finished, it simply swaps out the reference, and the old data becomes eligible for garbage collection.
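The asynchronous refresh can be sketched in Python with a plain `threading.Thread` in place of a .NET background worker. `populate` is a hypothetical stand-in for PopulateCache(), and the `threading.Event` in the example exists only to simulate a slow rebuild deterministically. Readers always get the last finished list; the new list replaces it with a single reference assignment, so nobody ever sees a half-built result.

```python
import threading
from typing import Callable, List, Optional


class AsyncRefreshingCache:
    """Serves the last finished snapshot while a background thread builds the next one."""

    def __init__(self, populate: Callable[[], List[str]]) -> None:
        self._populate = populate                 # hypothetical PopulateCache() stand-in
        self._items: List[str] = populate()       # only the very first load blocks
        self._lock = threading.Lock()
        self._worker: Optional[threading.Thread] = None

    def get_all(self) -> List[str]:
        return self._items                        # may be stale during a refresh

    def clear(self) -> None:
        """Start a background rebuild instead of emptying the cache."""
        with self._lock:
            if self._worker is not None and self._worker.is_alive():
                return                            # a refresh is already running
            self._worker = threading.Thread(target=self._refresh, daemon=True)
            self._worker.start()

    def _refresh(self) -> None:
        fresh = self._populate()                  # slow; readers keep the old list
        self._items = fresh                       # single reference swap; the old list
                                                  # is garbage once nobody holds it

    def wait(self) -> None:
        """Example helper: block until the current refresh (if any) has finished."""
        worker = self._worker
        if worker is not None:
            worker.join()


# Usage: the Event makes the "slow" rebuild wait until we let it finish.
state = {"version": 0}
rebuild_may_finish = threading.Event()

def populate() -> List[str]:
    if state["version"] > 0:
        rebuild_may_finish.wait()                 # simulate a long-running rebuild
    state["version"] += 1
    return [f"article-v{state['version']}"]

cache = AsyncRefreshingCache(populate)
cache.clear()
print(cache.get_all())   # → ['article-v1']  (stale data while rebuilding)
rebuild_may_finish.set()
cache.wait()
print(cache.get_all())   # → ['article-v2']
```

One design choice in this sketch: a clear that arrives while a refresh is already running is ignored, so changes made during a rebuild only show up after the next clear. A production version might instead queue one more refresh pass.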

This results in some pretty sweet response times: except for the very first startup of the system, every request is served directly from memory, without any data fetching at all.

This part was also crucial to the whole thing, since fetching the data is a rather long-running and CPU-heavy task, taking up to 60 seconds when I tested it against 10,000 articles (with a debugger attached, on my laptop).

Initially I tried to speed up the querying and object building enough to allow a synchronous cache refill, but although I made some optimizations (like avoiding some LINQ methods), my attempts to get the execution time down to something acceptable were futile.

Right now the cache is just a simple List<T> that stores everything the repository returned, but if the need arises it can easily be changed to use memcached or some other, more sophisticated caching mechanism.