One of the main advantages students get from being members of imagineClub is that they get access to uploaded course materials through the website. Naturally, the new site has to support file upload and download somehow, and yesterday I started implementation of that feature.

In theory this sounds really simple, and since file upload in MonoRail is trivial, I figured it wouldn’t be a problem to implement.

One major thing to consider when designing a file upload feature is the question: Save to disk or save to database? Let’s look at the two options:

Save to disk:

Pro: Very easy

Con: Requires metadata to be kept in the database. Could go out of sync with the db. Requires backup. Requires special permissions.

Save to db:

Pro: Zero setup. Data all in one place, backup hugely simplified. Enforces data integrity

Con: Non-trivial implementation.

Naturally, I went with the db option. Deployment is much easier if you don’t need to deal with file permissions, and most hosters back up databases anyway. So if things go south, the only thing I need to recover the site is the database file.

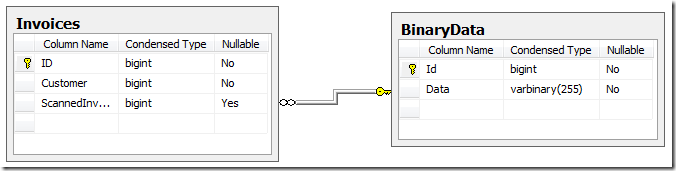

Some searching revealed that binary data could be mapped to the database through AR quite easily:

[Property(ColumnType = "BinaryBlob", SqlType = "varbinary(MAX)")]

public byte[] BinaryData { get; set; }

The problem is that it crashed ALL of my database-dependent unit tests:

------------ System.Data.SQLite.SQLiteException : SQLite error

near "MAX": syntax error

Apparently SQLite can’t parse MAX as a column size and fails. Since it would accept a numeric value instead, I looked at the SQL Server 2008 documentation for varbinary to find out what MAX stands for. It turns out the maximum storage size is exactly 2147483647 bytes (2^31-1), so my natural reaction was to change the SqlType to varbinary(2147483647) instead of MAX. Now SQLite can interpret it and all tests run fine again, but creating the schema on SQL Server isn’t possible any more, because an explicit varbinary size may only range from 1 to 8000 and MAX is a separate keyword rather than shorthand for a number. The result is the following (odd) error:

The size (2147483647) given to the column 'BinaryData' exceeds the maximum allowed for any data type (8000).
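The SQLite half of this mismatch is easy to probe outside the test suite. Here is a minimal sketch, assuming the System.Data.SQLite package is referenced; the class name `ColumnTypeProbe`, the method `SqliteAccepts` and the table name `Probe` are made up for illustration and are not part of the site's code:

```csharp
using System;
using System.Data.SQLite; // assumption: the System.Data.SQLite package is referenced

public static class ColumnTypeProbe
{
    // Returns true if an in-memory SQLite database accepts a CREATE TABLE
    // statement that declares a column with the given type string.
    public static bool SqliteAccepts(string sqlType)
    {
        using (var connection = new SQLiteConnection("Data Source=:memory:"))
        {
            connection.Open();
            using (var command = connection.CreateCommand())
            {
                // Hypothetical table name, used only for this probe.
                command.CommandText = "CREATE TABLE Probe (BinaryData " + sqlType + ")";
                try
                {
                    command.ExecuteNonQuery();
                    return true;
                }
                catch (SQLiteException)
                {
                    // SQLite could not parse the column definition.
                    return false;
                }
            }
        }
    }

    public static void Main()
    {
        var candidates = new[] { "varbinary(MAX)", "varbinary(2147483647)", "image" };
        foreach (var sqlType in candidates)
        {
            var verdict = SqliteAccepts(sqlType) ? "accepted" : "rejected";
            Console.WriteLine(sqlType + ": " + verdict);
        }
    }
}
```

Going by the behaviour described above, I would expect SQLite to reject only the MAX variant. This says nothing about SQL Server, which has to be checked against a real instance.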

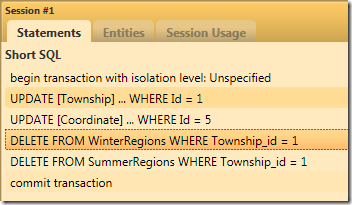

So, what we just saw is a leaky abstraction inside the ORM. But NHibernate never claimed to abstract the DB completely away from me, so I won’t hold that against it. NHibernate explicitly supports these scenarios, and in a plain NHibernate setup it’s just a matter of having two different mapping files, one mapping to the appropriate SQLite datatype and the other mapping to the SQL Server 2008 datatype, which would be varbinary(MAX).

But I’m not using NHibernate directly here. I’m using ActiveRecord, which handles mapping through attributes on the data classes, and I have no intention of scattering #if directives around my code.

The problem here is mainly that whenever you are trying to use two different RDBMS at once you are limiting yourself to the least common denominator, and you have to deal with that.

I won’t be able to use advanced SQL Server 2008 features, and I also won’t be able to use anything fancy inside SQLite either.

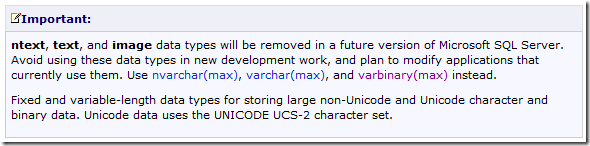

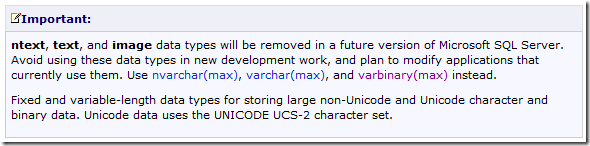

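The least common denominator in this case is the datatype image, which Microsoft discourages in its documentation: image is marked as deprecated and slated for removal in a future version of SQL Server, with varbinary(MAX) recommended instead.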

This puts me in a delicate position, since the imagineClub website is hosted on a server I don’t control. I could wake up one morning and see the iC website down because the hosting company decided to upgrade all users to 2010 (or whatever version the next SQL Server will have).

And I know, providers usually send out warnings for stuff like this, but I doubt that after all the structural changes at imagineClub lately they even know where to send those warnings.

So, long story short: use image over varbinary(MAX) if you plan on doing in-memory SQLite testing, but keep in mind that your app may break once you upgrade to a version of SQL Server that drops the image type.
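For reference, this is roughly what the mapping looks like with that change applied. It is a sketch, not the actual imagineClub entity: the class name `UploadedFile` and the `Id` and `FileName` properties are made up for illustration, and only the `BinaryData` property with its `ColumnType` and `SqlType` settings comes from the mapping shown earlier.

```csharp
using Castle.ActiveRecord;

// Hypothetical entity; only the BinaryData mapping is taken from the post.
[ActiveRecord]
public class UploadedFile : ActiveRecordBase<UploadedFile>
{
    [PrimaryKey]
    public int Id { get; set; }

    // Illustrative metadata column, not part of the original mapping.
    [Property]
    public string FileName { get; set; }

    // "image" instead of "varbinary(MAX)": the type both SQLite and
    // SQL Server 2008 accept during schema creation, at the price of
    // relying on a type Microsoft has marked as deprecated.
    [Property(ColumnType = "BinaryBlob", SqlType = "image")]
    public byte[] BinaryData { get; set; }
}
```

The nice part is that the choice lives in a single attribute, so switching back to varbinary(MAX) later is a one-line change if the tests ever stop running against SQLite.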

Update: Looks like Krzysztof Kozmic had the same issues and found a quite clever solution for that. I’m not totally clear on how to do this with ActiveRecord, but it’s a very pragmatic approach to a problem that seems to not have a perfect solution anyway.